With the hype around AI reaching new heights, its potential to transform digital banking is bigger than ever. In particular, integrating generative AI into digital banking creates many exciting possibilities. From streamlining customer service to improving accessibility, AI-based solutions can change the way financial institutions interact with their customers.

However, this integration also raises serious concerns around compliance and privacy. As more financial institutions turn to AI to improve customer experience, they must continue to meet strict regulatory standards and protect user data with responsible AI practices.

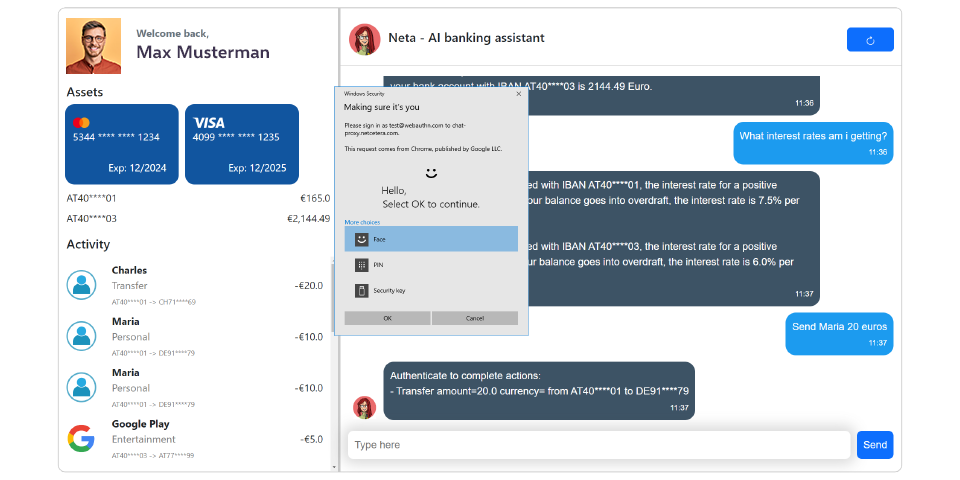

In this article, we’ll explore the challenges of keeping AI-based applications compliant while protecting user privacy. We’ll focus on chatbots used in the banking sector, where the need for security and compliance is exceptionally high.