Behind the scenes

The speech-based timetable app consists of four components. The first is the mobile app itself, which is installed on the smartphone and interacts with the user. It is responsible for speech recognition and for answering user questions or displaying results, in our case timetable information. Speech is transcribed using Google's Speech API, and Android's TextToSpeech reads the answers aloud.

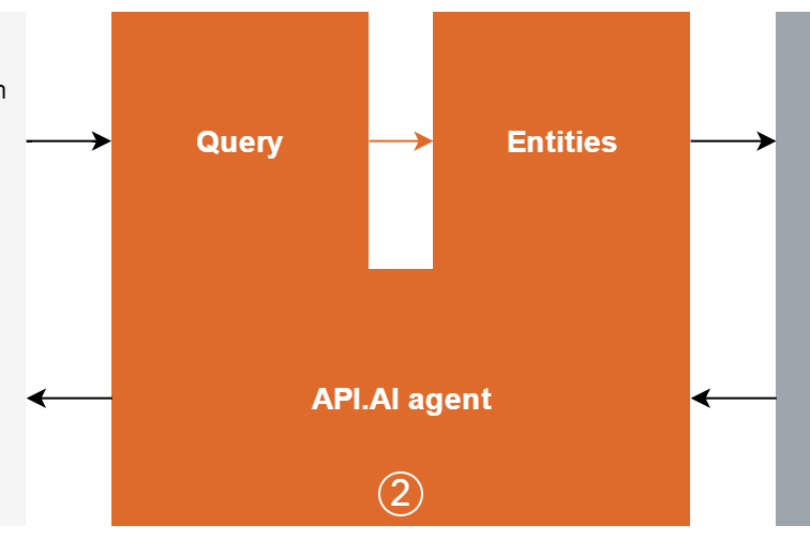

The actual analysis of the query, for example determining the departure and arrival locations from the transcribed sentence "When is the next train from Zurich to Bern?", is not done by the mobile app itself but by a second component that runs entirely in the cloud. For this second component, we currently use Google's API.AI platform. Several other platforms offer similar functionality, such as Watson from IBM or LUIS from Microsoft.

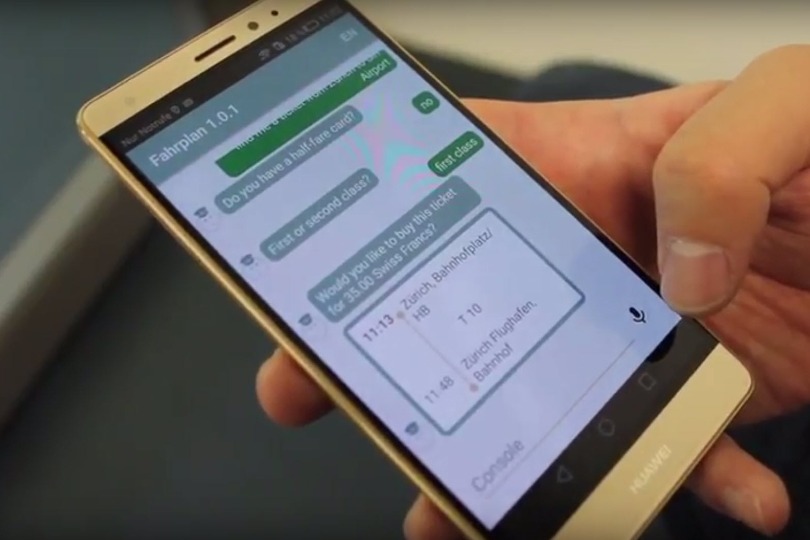

Within API.AI, one defines a so-called agent, which analyzes the transcribed text and identifies the different entities in it (railway stops in our case). The agent can be trained to identify entities in a variety of natural sentences. This makes it possible to correctly recognize not only "from Bern to Zurich" but also more complex sentences such as "Hello, I have to be in Zurich by 7 p.m. When do I need to catch the train in Bern?" The user shouldn't feel forced to adapt to the device. The goal is a conversation with the smartphone that is as natural as possible.
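To see why a trained agent is worth the effort, it helps to look at what a hand-written rule can and cannot do. The following is a minimal sketch of a naive, pattern-based extractor. It is not how API.AI works internally, and the class name `NaiveStopExtractor` and its pattern are purely illustrative assumptions. It handles the simple "from X to Y" phrasing but finds nothing in the longer sentence quoted above.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical baseline for illustration only; a trained agent replaces rules like this.
final class NaiveStopExtractor {
    // Matches "from <stop> to <stop>", where each stop is one or more capitalized words.
    private static final Pattern FROM_TO = Pattern.compile(
        "from\\s+([A-Z]\\w*(?:\\s+[A-Z]\\w*)*)\\s+to\\s+([A-Z]\\w*(?:\\s+[A-Z]\\w*)*)");

    // Returns {departure, arrival} if the sentence contains the fixed pattern, else empty.
    static Optional<String[]> extract(String sentence) {
        Matcher m = FROM_TO.matcher(sentence);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(new String[] {m.group(1), m.group(2)});
    }

    public static void main(String[] args) {
        String simple = "When is the next train from Zurich to Bern?";
        String complex = "Hello, I have to be in Zurich by 7 p.m. "
            + "When do I need to catch the train in Bern?";

        // The fixed pattern finds both stops in the simple sentence.
        extract(simple).ifPresent(s -> System.out.println(s[0] + " -> " + s[1]));

        // The complex sentence has no "from ... to ..." phrase, so the rule finds nothing.
        System.out.println(extract(complex).isPresent());
    }
}
```

Every new phrasing would need another rule, which is exactly the maintenance burden that training an agent on example sentences is meant to avoid.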

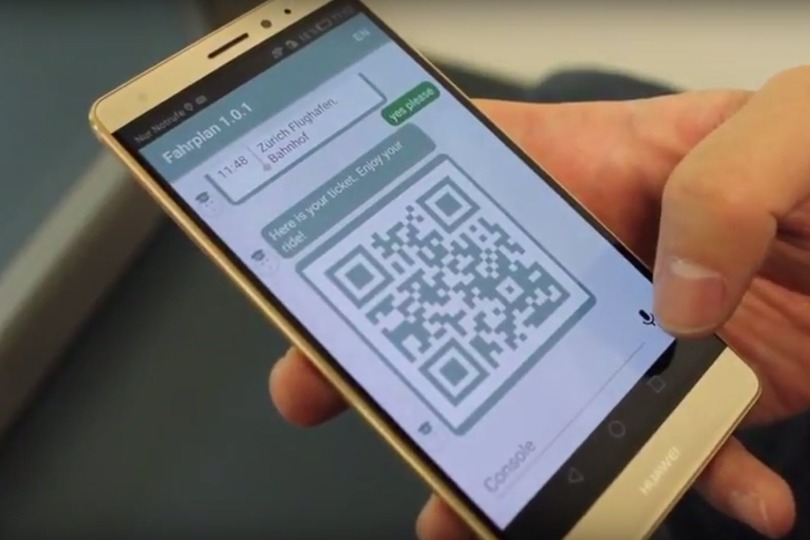

Once we have determined the departure and arrival locations, we can search for the corresponding timetable information. We use Opendata Transport for this purpose. Since API.AI and Opendata Transport cannot communicate with each other directly, we introduced a third component as a middle layer between them. This third component is a Java-based web application that we developed ourselves and that also runs in the cloud. Initially, our web application was only responsible for mapping between API.AI and Opendata Transport and for generating text answers that sound as natural as possible. It now also stores the conversations and supplies additional context information.
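The two original tasks of the middle layer, mapping and answer generation, can be sketched roughly as follows. This is a simplified illustration, not our production code: the class `TimetableBridge` and its methods are hypothetical, and the endpoint URL and the `from` and `to` query parameters are assumptions about Opendata Transport's public HTTP interface that should be checked against its documentation.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper illustrating the middle layer's two original tasks.
final class TimetableBridge {
    // Assumed connections endpoint of Opendata Transport.
    static final String BASE_URL = "http://transport.opendata.ch/v1/connections";

    // Maps the stops recognized by the agent to a timetable query URL.
    static String buildQueryUrl(String from, String to) {
        return BASE_URL
            + "?from=" + URLEncoder.encode(from, StandardCharsets.UTF_8)
            + "&to=" + URLEncoder.encode(to, StandardCharsets.UTF_8);
    }

    // Turns the timetable data into a sentence that can be read aloud.
    // The platform is optional and is left out if it is unknown.
    static String buildAnswer(String from, String to, String departureTime, String platform) {
        StringBuilder answer = new StringBuilder()
            .append("The next train from ").append(from)
            .append(" to ").append(to)
            .append(" leaves at ").append(departureTime);
        if (platform != null && !platform.isEmpty()) {
            answer.append(" from platform ").append(platform);
        }
        return answer.append('.').toString();
    }

    public static void main(String[] args) {
        System.out.println(buildQueryUrl("Zurich HB", "Bern"));
        System.out.println(buildAnswer("Zurich", "Bern", "14:02", "7"));
    }
}
```

The sketch leaves out the HTTP call and the JSON parsing, as well as the conversation storage and context handling that were added later.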

Opendata Transport, mentioned above, is the fourth component in our architecture and delivers the necessary routing and timetable information. Our web application does not depend on Opendata Transport specifically, so one could switch to an alternative provider of timetable information if necessary.
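One common way to keep a data source interchangeable like this is to hide it behind a small interface. The sketch below shows the idea under our own assumptions: the names `TimetableProvider`, `FixedTimetableProvider`, and `TimetableService` are hypothetical, and the in-memory provider stands in for a real one that would call Opendata Transport or another service.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical abstraction over any source of timetable information.
interface TimetableProvider {
    // Returns the next departure time for the route, or empty if none is known.
    Optional<String> nextDeparture(String from, String to);
}

// In-memory stand-in for a real provider; useful for tests and demos.
final class FixedTimetableProvider implements TimetableProvider {
    private final Map<String, String> departures;

    FixedTimetableProvider(Map<String, String> departures) {
        this.departures = departures;
    }

    @Override
    public Optional<String> nextDeparture(String from, String to) {
        return Optional.ofNullable(departures.get(from + "->" + to));
    }
}

// The answering logic only knows the interface, so providers can be swapped freely.
final class TimetableService {
    private final TimetableProvider provider;

    TimetableService(TimetableProvider provider) {
        this.provider = provider;
    }

    String answer(String from, String to) {
        return provider.nextDeparture(from, to)
            .map(time -> "The next train from " + from + " to " + to + " leaves at " + time + ".")
            .orElse("Sorry, I could not find a connection from " + from + " to " + to + ".");
    }

    public static void main(String[] args) {
        TimetableProvider provider = new FixedTimetableProvider(Map.of("Zurich->Bern", "14:02"));
        TimetableService service = new TimetableService(provider);
        System.out.println(service.answer("Zurich", "Bern"));
        System.out.println(service.answer("Bern", "Geneva"));
    }
}
```

Switching to a different timetable provider then means writing one new implementation of the interface; the code that generates answers stays unchanged.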

Speech recognition and text-to-speech function at least partially without an Internet connection. However, an Internet connection is generally necessary for applications that interact with other providers/interfaces or rely on up-to-date information.