When implementing any new technology, organizations should seriously consider security and compliance implications. Particularly in industries like banking and healthcare, ensuring that AI systems are secure and compliant is paramount.

One way to address these concerns is to use an open-source AI model. Unlike proprietary systems such as ChatGPT, open-source LLMs publish their model weights and are typically more transparent about their architecture and, in some cases, their training data and how data is processed. Additionally, open-source models can be hosted within an organization’s own infrastructure. This gives the organization greater control and room for customization, so the model can be aligned with its security and compliance requirements.

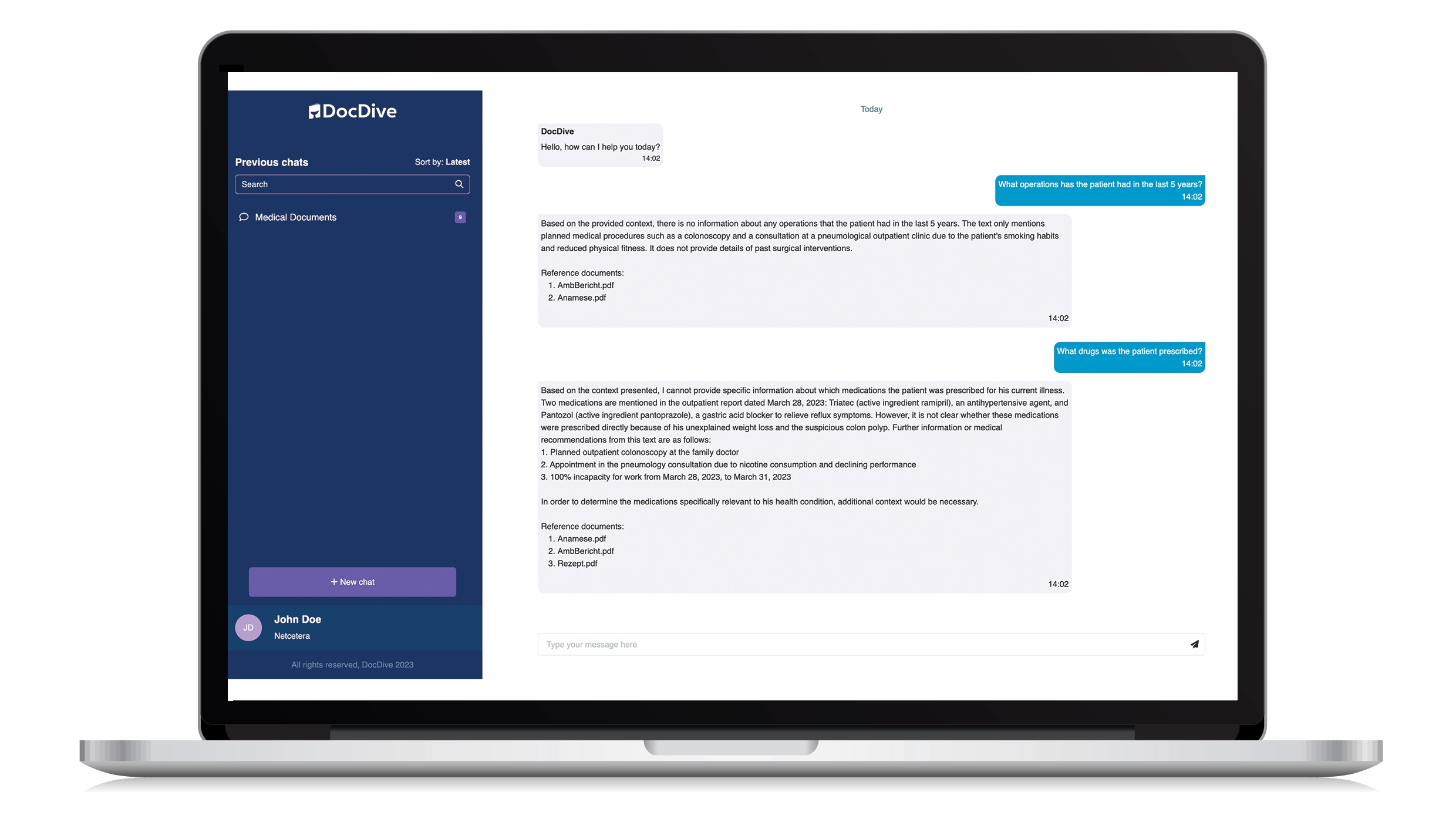

For example, a healthcare provider could use an open-source LLM trained on medical data and host it on its own servers. This would keep sensitive patient information within the provider’s ecosystem, reducing the risk of data breaches and supporting compliance with regulations like HIPAA.
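Keeping patient data in-house also depends on the application refusing to send that data anywhere else. The following is a minimal sketch of such a guard, not a description of any particular product. It assumes the self-hosted model is reachable either through an approved internal hostname or at a private IP address. The hostname `llm.internal.example-hospital.org` and the function names are hypothetical.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical allow-list of inference hosts inside the provider's own network.
APPROVED_INTERNAL_HOSTS = {"llm.internal.example-hospital.org"}


def is_internal_endpoint(url: str) -> bool:
    """Return True only if the URL targets an approved host or a private IP address."""
    host = urlparse(url).hostname
    if host is None:
        # Not a usable URL, so treat it as untrusted.
        return False
    if host in APPROVED_INTERNAL_HOSTS:
        return True
    try:
        # Literal IP addresses are accepted only if they are in a private range.
        return ipaddress.ip_address(host).is_private
    except ValueError:
        # Any other hostname is treated as external.
        return False


def require_internal_endpoint(url: str) -> None:
    """Raise before any patient data would leave the provider's network."""
    if not is_internal_endpoint(url):
        raise PermissionError(f"Refusing to send patient data to external endpoint: {url}")
```

An allow-list is deliberately strict. A hostname that is not explicitly approved counts as external, even if it might resolve to an internal address.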

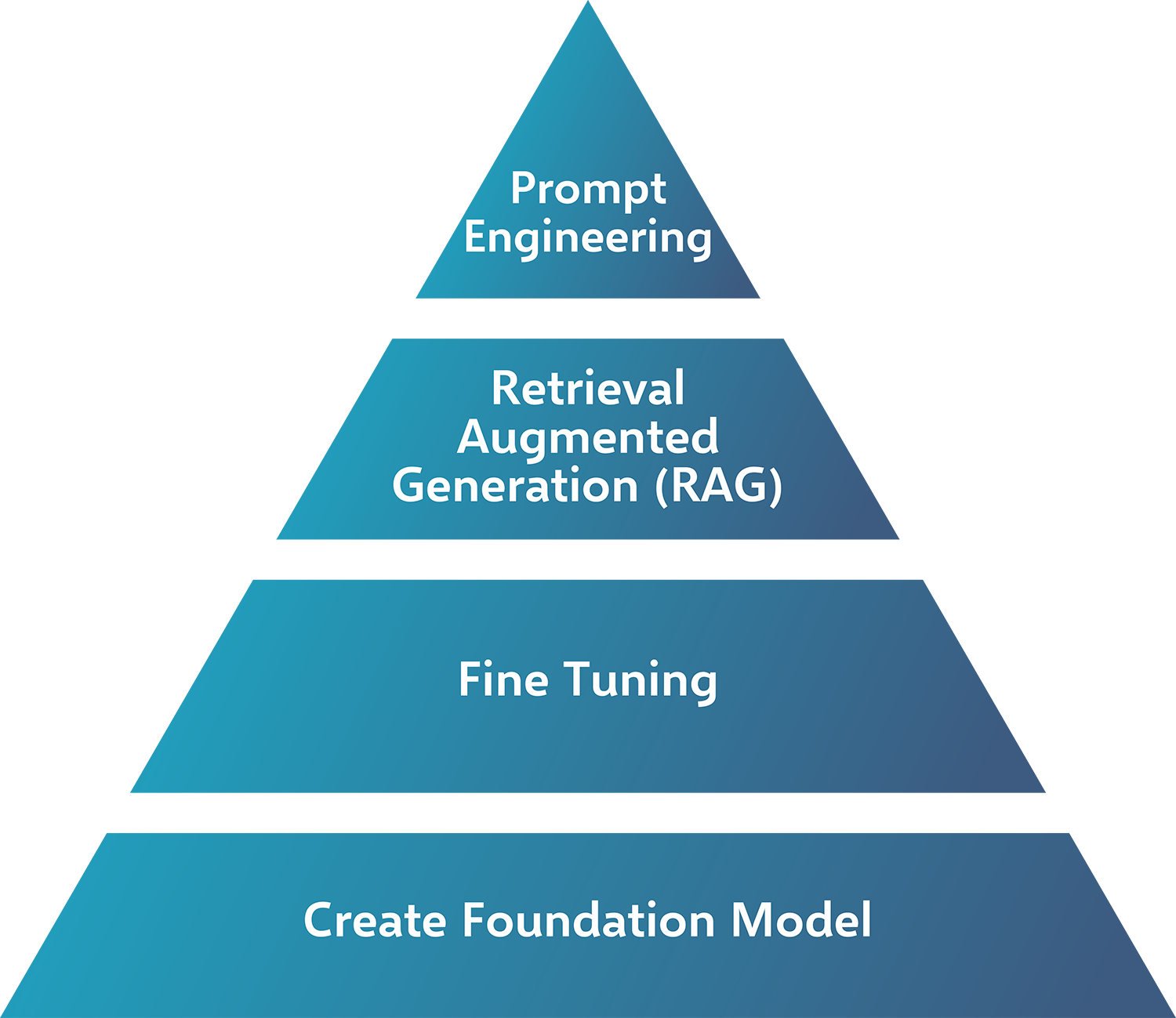

When evaluating whether to use an open-source or proprietary LLM, organizations should first consider their specific needs and priorities:

Open-source models:

May be the better choice if transparency and security are high priorities. These models allow organizations to fine-tune them on their own data so that they behave as intended for the organization’s use case.

Proprietary models:

May be the better choice if speed and general versatility are most important. These models often run in the provider’s cloud, and providers typically share limited details about their training data and how they process an organization’s data. Because many proprietary models offer only limited fine-tuning options, they may not perform as well as customized open-source models in specialized domains and downstream tasks.

Ultimately, organizations should consult with AI experts to determine the most suitable approach for their specific goals and requirements.

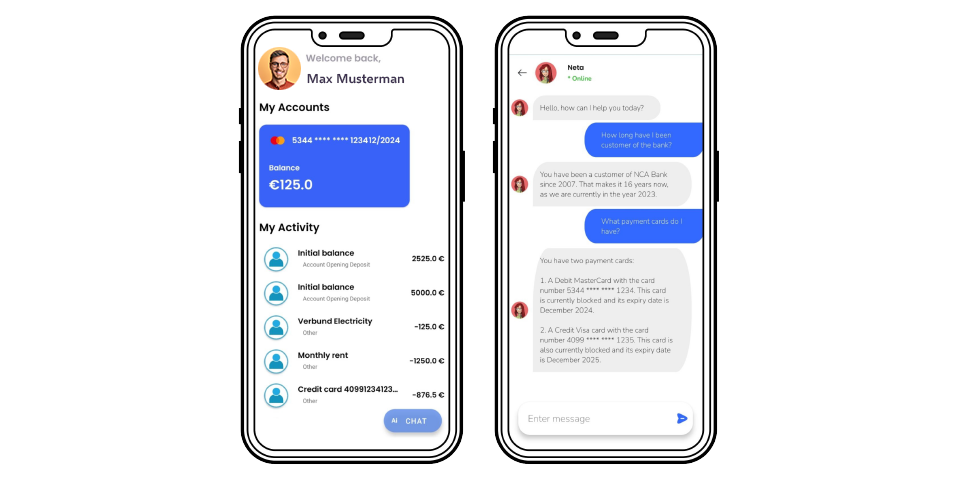

AI engines are decision-support tools, and the appropriate level of automation should be defined case by case. AI can automate many tasks and provide valuable insights. In some cases, however, human experts need to review and validate its outputs to ensure accuracy and compliance. For example, an LLM may flag a banking transaction as potentially fraudulent, but a human analyst still needs to review the flagged transaction to determine whether it is actually fraudulent or a false positive. Human experts also need to handle any edge cases the AI does not cover.
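The human-in-the-loop pattern described above can be sketched as a simple routing rule. This is an illustrative sketch under stated assumptions, not G+D Netcetera’s implementation. It assumes the model emits a fraud score between 0 and 1. The `Transaction` fields, the `fraud_score` name, and the 0.5 review threshold are all hypothetical. The model never blocks a payment on its own. It only decides whether a transaction is cleared automatically or sent to an analyst.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_CLEAR = "auto_clear"
    HUMAN_REVIEW = "human_review"


@dataclass
class Transaction:
    tx_id: str
    amount: float
    fraud_score: float  # hypothetical model output, expected in [0, 1]


def route(tx: Transaction, review_threshold: float = 0.5) -> Decision:
    """Decide whether a transaction can be cleared or needs a human analyst."""
    if not 0.0 <= tx.fraud_score <= 1.0:
        # Out-of-range or NaN scores are edge cases the model should not decide alone.
        return Decision.HUMAN_REVIEW
    if tx.fraud_score >= review_threshold:
        # Flagged as potentially fraudulent: an analyst confirms or rejects it.
        return Decision.HUMAN_REVIEW
    return Decision.AUTO_CLEAR


def triage(transactions, review_threshold: float = 0.5):
    """Split transactions into auto-cleared ones and a queue for human review."""
    cleared, review_queue = [], []
    for tx in transactions:
        if route(tx, review_threshold) is Decision.HUMAN_REVIEW:
            review_queue.append(tx)
        else:
            cleared.append(tx)
    return cleared, review_queue


if __name__ == "__main__":
    txs = [
        Transaction("t1", 42.0, 0.05),
        Transaction("t2", 9800.0, 0.91),
        Transaction("t3", 10.0, float("nan")),
    ]
    cleared, queue = triage(txs)
    print([t.tx_id for t in cleared], [t.tx_id for t in queue])
```

The threshold is where an organization defines its automation level case by case. Lowering it sends more transactions to analysts. Raising it automates more decisions but accepts more risk of missed fraud.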

For this reason, at G+D Netcetera, we’ve designed our AI solutions with security and compliance at the forefront to ensure that sensitive data remains secure. Our experts work with our clients, including banking and healthcare organizations, to understand their specific security and compliance needs and implement AI solutions that adhere to relevant regulations and standards.